SPIKE System Overview

This document provides an overview of SPIKE, a SPIFFE-native Secrets Management solution. It is designed to ensure secure storage, recovery, and management of sensitive data with a focus on simplicity, reliability, and scalability for production environments.

SPIKE Components

SPIKE (Secure Production Identity for Key Encryption) is a Secrets Manager built on top of a SPIFFE (Secure Production Identity Framework for Everyone) identity control plane, consisting of four components:

- SPIKE Nexus (./nexus): The secrets store.

- SPIKE Pilot (./spike): The CLI.

- SPIKE Keeper (./keeper): The redundancy mechanism.

- SPIKE Bootstrap (./bootstrap): Securely initializes SPIKE Nexus with the required crypto material without human intervention.

The system provides high availability for secret storage with a manual recovery mechanism in case of irrecoverable failure.

Here is an overview of each SPIKE component:

SPIKE Nexus

- SPIKE Nexus is the primary component responsible for secrets management.

- It creates and manages the root encryption key.

- It handles secret encryption and decryption.

- It syncs the root key's Shamir shards with SPIKE Keepers. These shards can then be used to recover SPIKE Nexus after a crash.

- It provides a RESTful mTLS API for secret lifecycle management, policy management, admin operations, and disaster recovery.

SPIKE Keeper

- It is designed to be simple and reliable.

- It does one thing and does it well.

- Its only goal is to keep a Shamir Shard in memory.

- By design, it has no knowledge of its peer SPIKE Keepers or of SPIKE Nexus. It does not require any configuration to be brought up. This makes it simple to operate, replace, scale, and replicate.

- It enables automatic recovery if SPIKE Nexus crashes.

Since each SPIKE Keeper holds only a single shard, compromising one SPIKE Keeper will not compromise the system.

The more SPIKE Keepers you have, the more reliable and secure your SPIKE deployment will be. For production deployments, we recommend 5 SPIKE Keeper instances with a shard-generation threshold of 3.

Check out the SPIKE Production Hardening Guide for more details.
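The 5-of-3 recommendation can be checked with simple arithmetic. With N shards and a threshold of T, SPIKE Nexus can still recover automatically while up to N - T SPIKE Keepers are down, and an attacker learns nothing about the root key from up to T - 1 compromised SPIKE Keepers. The Go sketch below encodes that reasoning; the KeeperQuorum type and the rule that T must be at least 2 are illustrative assumptions, not SPIKE's configuration API.

```go
package main

import (
	"errors"
	"fmt"
)

// KeeperQuorum is a hypothetical description of a Shamir setup: Shares is the
// number of SPIKE Keepers (one shard each), Threshold is the number of shards
// needed to reconstruct the root key.
type KeeperQuorum struct {
	Shares    int
	Threshold int
}

// Validate rejects setups that cannot reconstruct the key, and setups with a
// threshold of 1, where every shard would be the key itself.
func (q KeeperQuorum) Validate() error {
	if q.Threshold < 2 {
		return errors.New("threshold must be at least 2")
	}
	if q.Threshold > q.Shares {
		return errors.New("threshold cannot exceed the number of shares")
	}
	return nil
}

// ToleratedOutages is how many Keepers can be unavailable while automatic
// recovery still succeeds.
func (q KeeperQuorum) ToleratedOutages() int { return q.Shares - q.Threshold }

// CompromiseResistance is how many Keepers can be compromised without
// revealing anything about the root key.
func (q KeeperQuorum) CompromiseResistance() int { return q.Threshold - 1 }

func main() {
	q := KeeperQuorum{Shares: 5, Threshold: 3}
	if err := q.Validate(); err != nil {
		panic(err)
	}
	fmt.Println(q.ToleratedOutages(), q.CompromiseResistance()) // 2 2
}
```

With the recommended settings, two SPIKE Keepers can be down and two can be compromised without affecting recovery or confidentiality.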

SPIKE Pilot

- It is the CLI to the system (i.e., the spike binary that you see in the examples).

- It converts CLI commands to RESTful mTLS API calls to SPIKE Nexus.

SPIKE Pilot is the only management entry point to the system. Thus, deleting/disabling/removing SPIKE Pilot reduces the attack surface of the system since admin operations will not be possible without SPIKE Pilot.

Similarly, revoking the SPIRE Server registration of SPIKE Pilot’s SVID (once SPIKE Pilot is no longer needed) will effectively block administrative access to the system, improving the overall security posture.

SPIKE Bootstrap

- It is a one-time initialization component that runs during system setup.

- It generates a cryptographically secure random root key.

- It splits the root key into Shamir shards and distributes them to the configured SPIKE Keeper instances.

- It verifies that SPIKE Nexus has successfully initialized by performing an end-to-end encryption test.
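To make the key-generation and verification steps concrete, here is a minimal local sketch: it draws a 32-byte root key from the operating system's CSPRNG and checks that an encrypt/decrypt round trip with that key returns the original plaintext. It assumes AES-256-GCM as the cipher, which the list above does not specify, and SPIKE Bootstrap's real end-to-end test goes through SPIKE Nexus rather than a local check. newRootKey and roundTrip are hypothetical helper names.

```go
package main

import (
	"bytes"
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"fmt"
)

// newRootKey returns a 32-byte key (AES-256 size, an assumption) from the OS CSPRNG.
func newRootKey() ([]byte, error) {
	key := make([]byte, 32)
	if _, err := rand.Read(key); err != nil {
		return nil, err
	}
	return key, nil
}

// roundTrip encrypts probe with AES-GCM under key, decrypts it again, and
// reports whether the plaintext survived unchanged.
func roundTrip(key, probe []byte) (bool, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return false, err
	}
	gcm, err := cipher.NewGCM(block)
	if err != nil {
		return false, err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return false, err
	}
	ciphertext := gcm.Seal(nil, nonce, probe, nil)
	plaintext, err := gcm.Open(nil, nonce, ciphertext, nil)
	if err != nil {
		return false, err
	}
	return bytes.Equal(plaintext, probe), nil
}

func main() {
	key, err := newRootKey()
	if err != nil {
		panic(err)
	}
	ok, err := roundTrip(key, []byte("spike-bootstrap-probe"))
	if err != nil {
		panic(err)
	}
	fmt.Println("round trip ok:", ok)
}
```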

SPIKE Bootstrap is designed to run once per deployment. In Kubernetes environments, it uses a ConfigMap to track whether bootstrap has completed, preventing duplicate initialization. In bare-metal deployments, it runs each time unless explicitly skipped.

This separation of concerns keeps SPIKE Nexus’s initialization flow simple: SPIKE Nexus always polls SPIKE Keepers for shards, while SPIKE Bootstrap handles the initial key generation and distribution.

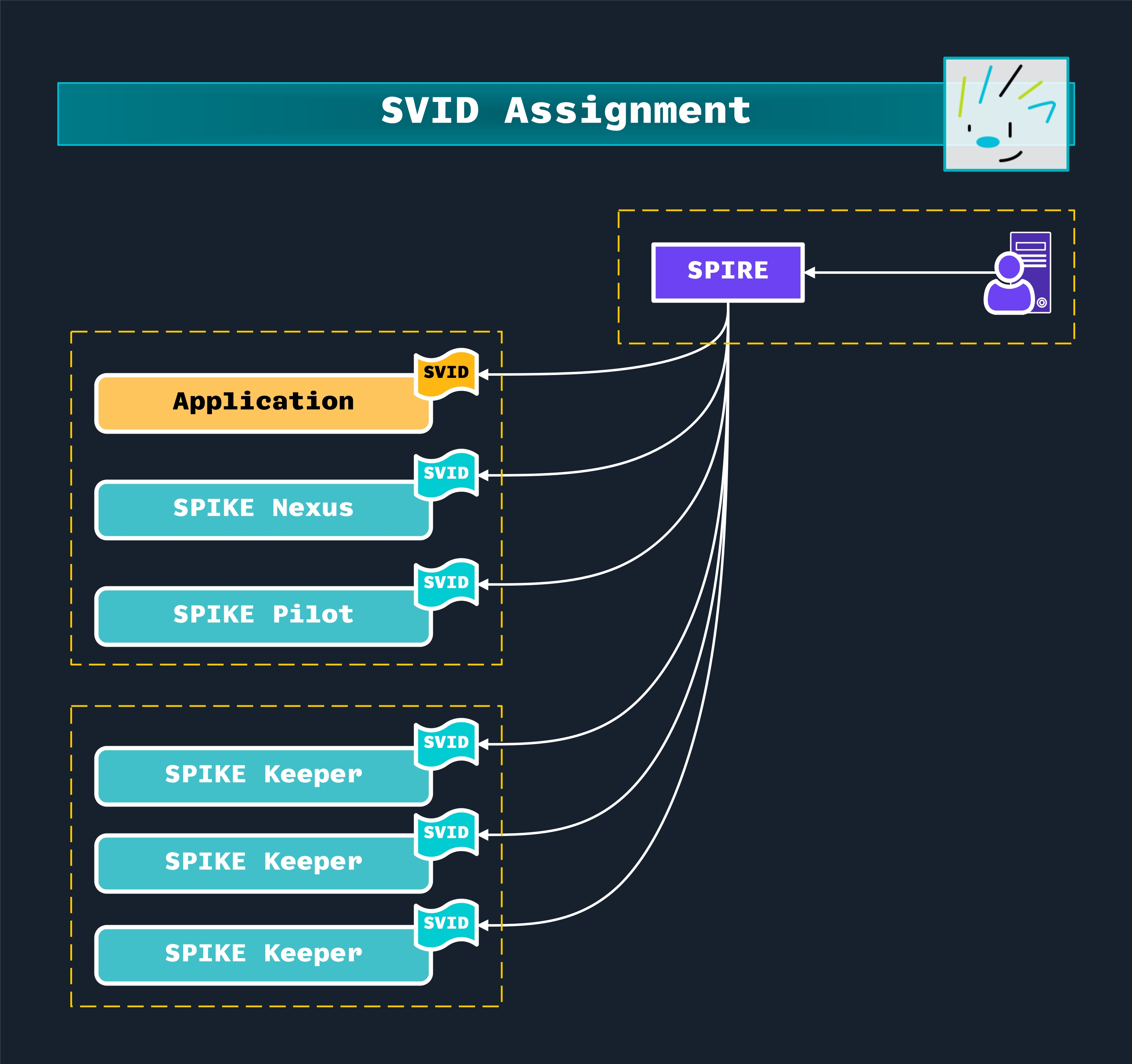

Identity Control Plane

The following diagram shows how SVIDs are assigned to SPIKE components and other actors in the system, using SPIRE as the identity control plane. SVIDs (SPIFFE Verifiable Identity Documents) are X.509 digital certificates that contain a SPIFFE ID in their SAN (Subject Alternative Name).

Establishing the Identity Control Plane.

In a SPIKE deployment, SPIRE acts as the central authority that issues SVIDs to different workloads:

- Applications that need to manage the lifecycle of secrets stored in SPIKE Nexus.

- SPIKE infrastructure components:

  - SPIKE Nexus

  - SPIKE Pilot

  - Multiple SPIKE Keeper instances

  - SPIKE Bootstrap, to be executed once during system bootstrapping.

Each component receives its own SVID, which serves as a cryptographically verifiable identity document. These SVIDs allow the components to:

- Prove their identity to other services

- Establish secure, authenticated mTLS connections

- Access resources they’re authorized to use

- Communicate securely with other components in the system

The dashed boxes represent distinct security and deployment boundaries. SPIRE provides identity management capabilities that span across these trust boundaries. This architecture allows administrative operations to be performed on a hardened, secured SPIRE Server instance (shown in the top yellow box). This restricts direct access to sensitive operations (like creating SPIRE Server registration entries) from users and applications located in other trust boundaries.

Zero Trust FTW!

The approach described here is a common pattern in zero-trust architectures, where every service needs to have a strong, verifiable identity regardless of its network location.

This approach is more secure than traditional methods like shared secrets or network-based security, as each workload gets its own unique, short-lived identity that can be automatically rotated and revoked if needed.

Builtin SPIFFE IDs

SPIKE Nexus recognizes the following builtin SPIFFE IDs:

- spiffe://$trustRoot/spike/pilot/role/superuser: Super Admin

- spiffe://$trustRoot/spike/pilot/role/recover: Recovery Admin

- spiffe://$trustRoot/spike/pilot/role/restore: Restore Admin

You can check out the Administrative Access section of the SPIKE security model for more information about these roles.
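A minimal sketch of how a server might map a verified peer SPIFFE ID to one of these builtin roles, assuming $trustRoot is a plain trust-domain string such as example.org. builtinRole is a hypothetical helper, not SPIKE's code. It uses exact matching, so an ID from another trust domain, or one with extra path segments such as .../role/superuser/extra, does not match.

```go
package main

import "fmt"

// builtinRoles maps the path of each builtin SPIKE SPIFFE ID to its role.
var builtinRoles = map[string]string{
	"/spike/pilot/role/superuser": "Super Admin",
	"/spike/pilot/role/recover":   "Recovery Admin",
	"/spike/pilot/role/restore":   "Restore Admin",
}

// builtinRole reports which builtin role, if any, a verified peer SPIFFE ID
// maps to. trustRoot is the trust domain, e.g. "example.org" (hypothetical).
// Matching is exact: other trust domains and longer paths are rejected.
func builtinRole(trustRoot, spiffeID string) (string, bool) {
	prefix := "spiffe://" + trustRoot
	for path, role := range builtinRoles {
		if spiffeID == prefix+path {
			return role, true
		}
	}
	return "", false
}

func main() {
	role, ok := builtinRole("example.org", "spiffe://example.org/spike/pilot/role/recover")
	fmt.Println(role, ok) // Recovery Admin true
}
```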

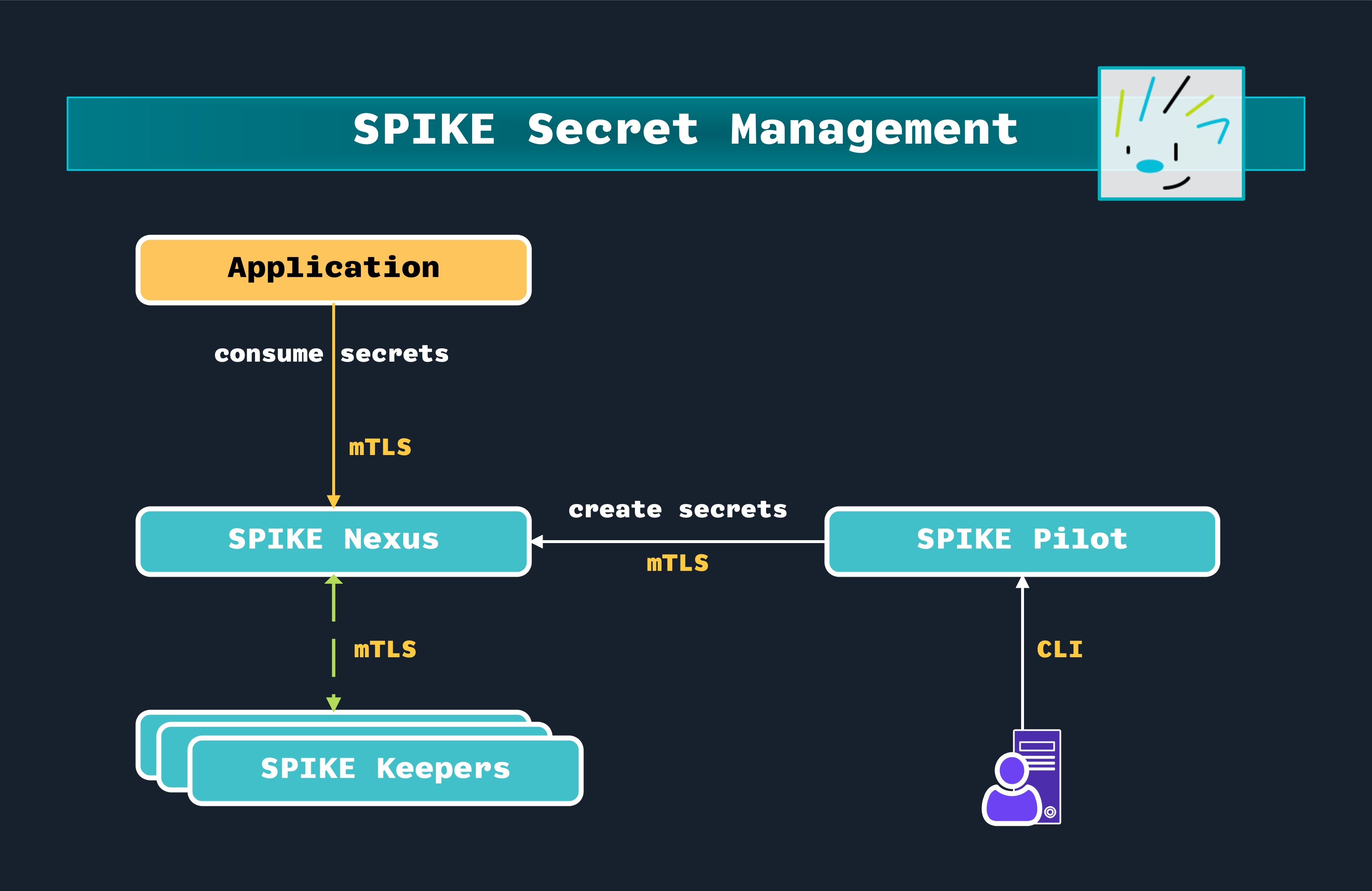

SPIKE Component Interaction

The following diagram depicts how various SPIKE components interact with each other:

Secret Management in SPIKE.

At the top level, there’s an Application that consumes secrets through an mTLS (mutual TLS) connection to SPIKE Nexus. The application will likely use the SPIKE Developer SDK to consume secrets without having to implement the underlying SPIFFE mTLS wiring.

Secrets are created and managed as follows:

- An administrative user interacts with SPIKE Pilot through a command-line interface (the CLI is the spike binary itself).

- SPIKE Pilot then communicates with SPIKE Nexus over mTLS to create secrets.

SPIKE Nexus is the central management point for secrets. It’s our secrets store.

At the bottom of the diagram, multiple SPIKE Keepers connect to SPIKE Nexus via mTLS. Each SPIKE Keeper holds a single Shamir Secret Share (shard) of the root key that SPIKE Nexus maintains in memory.

This design ensures that compromising any individual SPIKE Keeper cannot breach the system, as a single shard is not enough to reconstruct the root key.


During system bootstrapping, SPIKE Bootstrap distributes these shards to the SPIKE Keepers, and SPIKE Nexus keeps them synchronized afterward. If SPIKE Nexus crashes or restarts, it automatically recovers by requesting shards from a threshold number of healthy SPIKE Keepers to reconstruct the root key.

This mechanism provides automatic resiliency and redundancy without requiring manual intervention or “unsealing” operations that are common in other secret management solutions.

The system's security and availability can be tuned by configuring both the total number of SPIKE Keepers and the threshold of required shards needed to reconstruct the root key. This flexibility allows implementors to balance their security requirements against operational needs, from basic redundancy to highly paranoid configurations that require many SPIKE Keepers to be healthy.

Both the individual shards and the assembled root key are exclusively held in memory and NEVER persisted to disk, forming a core aspect of SPIKE’s security model.

The system uses mTLS (mutual TLS) throughout for secure communication between components, which ensures:

- All communications are encrypted

- Both sides of each connection authenticate each other

- The system maintains a high level of security for secret management

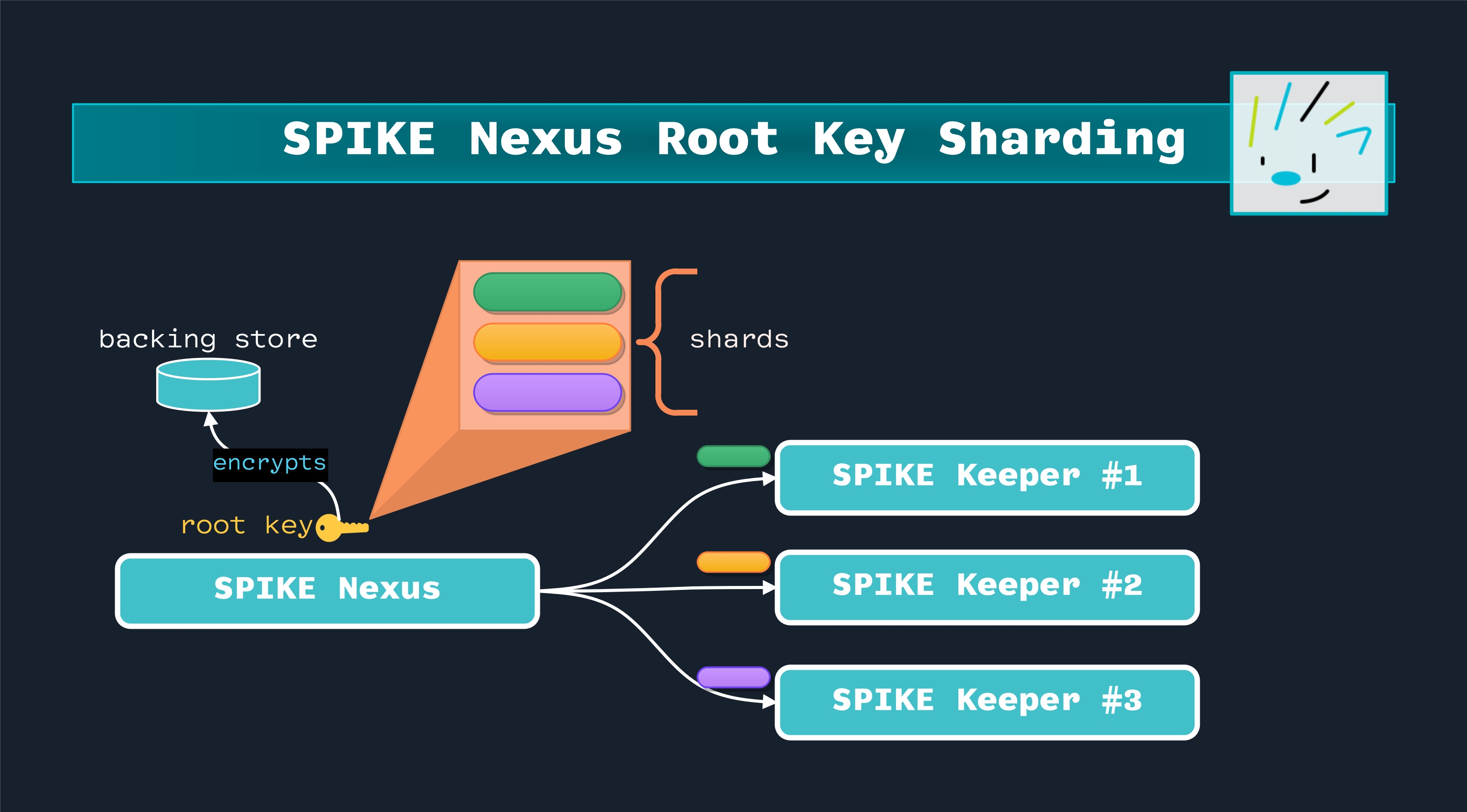

SPIKE Nexus Root Key Sharding

The following diagram shows how the SPIKE Nexus root key is split into shards and then delivered to SPIKE Keepers:

SPIKE Nexus root key sharding.

SPIKE Nexus has a root key that is essential for encrypting the backing store. This root key is split into Shamir shards based on a configurable number of shards and threshold. There should be as many SPIKE Keepers as there are shards.

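The key advantage of using Shamir sharding specifically (versus other forms of key splitting) is that it is information-theoretically secure: the root key is the constant term of a random polynomial, each shard is a point on that polynomial, and the key is recovered through polynomial interpolation. This means: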

- Any set of shards smaller than the threshold contains no meaningful information about the original key

- You need a threshold number of shards to reconstruct the key

- The system can be configured to require any M of N shards to reconstruct the root key (e.g., any 2 of 3, or 3 of 5, etc.)

This provides both security and fault tolerance: The system can continue operating even if some SPIKE Keepers become temporarily unavailable, as long as the threshold number of shards remains accessible.

SPIKE Bootstrap Flow

The following diagram depicts the SPIKE Bootstrap flow, where SPIKE Keepers receive their shards for SPIKE Nexus to use. Open the picture in a new tab for an enlarged version of it.

SPIKE Bootstrap flow.

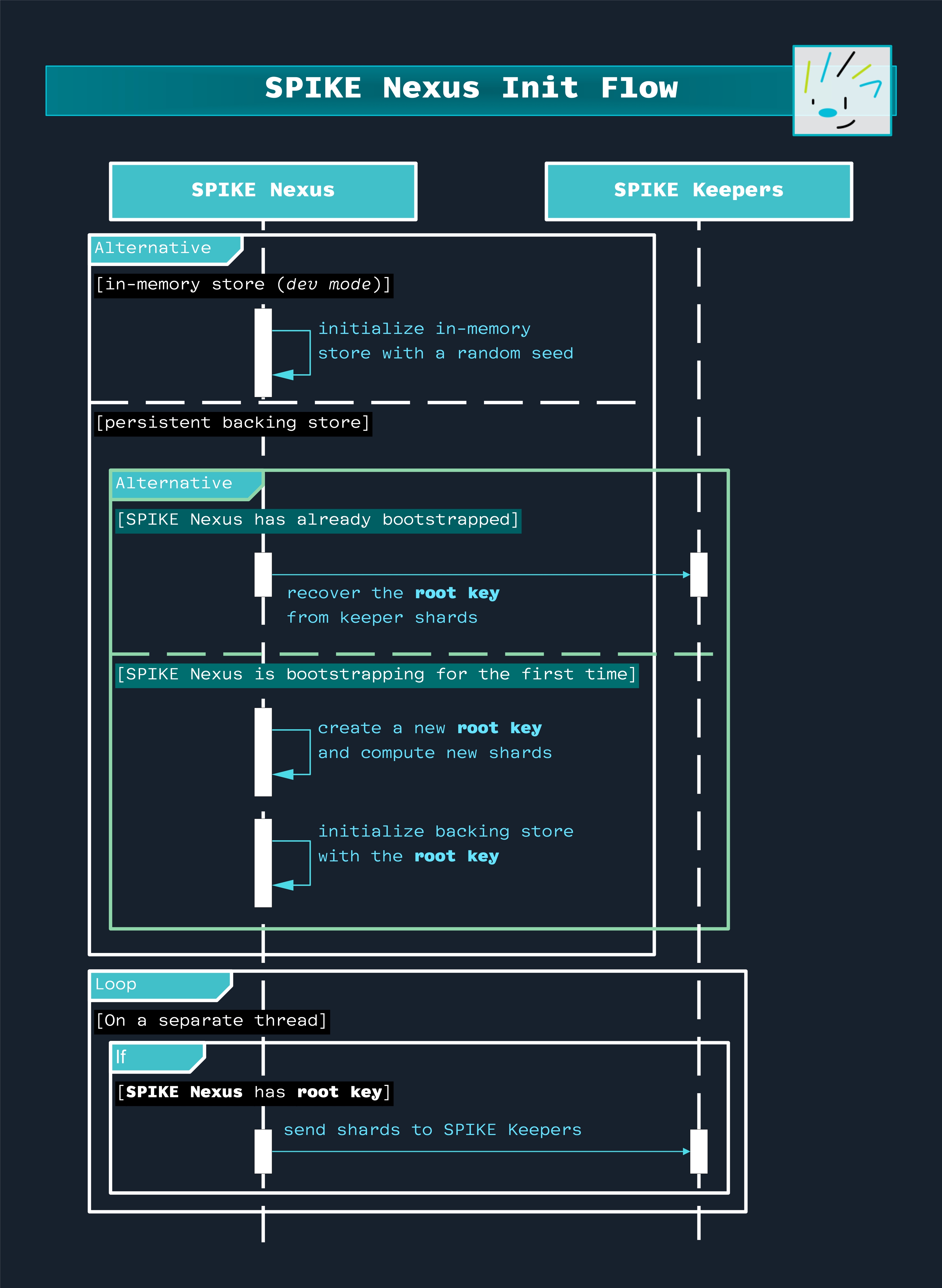

SPIKE Nexus Initial Bootstrapping

The following diagram depicts SPIKE Nexus initial bootstrapping flow.

SPIKE Nexus initialization.

When SPIKE Nexus is configured to use an in-memory backing store, we don’t need SPIKE Keepers because the database is in SPIKE Nexus’s memory and there is nothing to recover if SPIKE Nexus crashes. This is a convenient setup to use for development purposes.

When SPIKE Nexus is configured to use a persistent backing store (like SQLite), it does not generate the root key itself. Instead, SPIKE Nexus always polls SPIKE Keepers to collect enough shards to reconstruct the root key. This polling continues indefinitely until the threshold number of shards is collected.

The root key is generated by a separate component: SPIKE Bootstrap. When SPIKE Bootstrap runs, it generates a secure random root key, splits it into Shamir shards, and distributes those shards to the configured SPIKE Keeper instances. This separation of concerns keeps SPIKE Nexus’s initialization flow simple and predictable.

SPIKE Nexus Updating SPIKE Keepers

In addition, there is an ongoing operation that runs as a separate goroutine inside SPIKE Nexus:

- At regular intervals, if SPIKE Nexus has a root key, it computes Shamir shards and dispatches them to the SPIKE Keepers. This ensures that the shards remain synchronized even if individual SPIKE Keepers restart.

SPIKE Nexus updating SPIKE Keepers.

This flow establishes a secure boot process: SPIKE Bootstrap handles the initial key generation and distribution, while SPIKE Nexus focuses solely on recovering the root key from SPIKE Keepers whenever it starts.

The following state diagram illustrates how each of these recovery and restoration steps relate to the existence of the root key in memory.

SPIKE Nexus Root Key state diagram.

SPIKE “break-the-glass” Disaster Recovery

There is one edge case, though: if the whole system crashes and the SPIKE Keepers no longer hold any shards in memory, you will need to recover manually.

This event is highly unlikely: deploying a sufficient number of SPIKE Keepers with proper geographic distribution significantly reduces the probability of them all crashing simultaneously. Since SPIKE Keepers operate independently and do not communicate with each other, failures caused by systemic issues are minimized. With redundancy across diverse geographic locations, even large-scale outages or localized failures are unlikely to affect all SPIKE Keepers at once.

That being said, unexpected failures can occur, so SPIKE provides a disaster recovery procedure for these situations.

Need a Runbook?

The SPIKE Recovery Procedures page contains step-by-step instructions to follow before, during, and after a disaster.

You will need to prepare beforehand so that you can recover the root key when the system fails to automatically recover it from SPIKE Keepers.

The following diagram outlines creating recovery shards for SPIKE Nexus before a disaster strikes, while the system is healthy. The operator uses the spike operator recover command to create the shards. You can open the picture in a new tab for an enlarged version of it.

SPIKE Manual disaster recovery flow.

The following diagram outlines how you can use the spike operator restore command to restore SPIKE Nexus to its working state after a disaster. You can open the picture in a new tab for an enlarged version of it.

SPIKE Nexus manual restoration flow.

Preventive Backup

Run spike recover as Soon as You Can

You must back up the root key shards using spike recover BEFORE a disaster strikes. This is like having a spare key stored in a safe place before you lose your main keys. Without this proactive backup step, there would be nothing to recover from in a catastrophic failure.

This operation needs to be done BEFORE any disaster; ideally, shortly after deploying SPIKE.

Here is how the flow goes:

- The Operator runs spike recover using SPIKE Pilot.

- SPIKE Pilot saves the recovery shards in the home directory of the system.

- The Operator encrypts and stores these shards in a secure medium, and securely erases the copies generated as output by spike recover.

When later recovery is needed, the Operator will provide these shards to SPIKE to restore the system back to its working state.

Disaster Recovery

When disaster strikes:

- SPIKE Nexus and SPIKE Keepers have simultaneously crashed and restarted.

- SPIKE Nexus has lost its root key.

- SPIKE Keepers don’t have enough shards.

- Thus, automatic recovery is impossible and the system requires manual recovery.

In that case, the Operator uses spike restore to provide the previously backed-up shards one at a time:

- SPIKE Pilot forwards the entered shard to SPIKE Nexus.

- The system acknowledges each shard and tracks restoration progress, returning the number of shards received and the number still needed to restore the root key.
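The progress reporting in this step can be sketched as a small tracker that accepts one shard at a time and reports how many shards have been received and how many are still needed. restoreTracker is hypothetical, and keying shards by index to reject duplicates is an assumption about how repeated input should be handled.

```go
package main

import (
	"errors"
	"fmt"
)

// restoreTracker is a hypothetical accumulator for shards entered one at a
// time during a manual restore.
type restoreTracker struct {
	threshold int
	shards    map[int][]byte // keyed by shard index so duplicates are rejected
}

func newRestoreTracker(threshold int) *restoreTracker {
	return &restoreTracker{threshold: threshold, shards: make(map[int][]byte)}
}

// remaining is how many more shards are needed, never negative.
func (t *restoreTracker) remaining() int {
	if r := t.threshold - len(t.shards); r > 0 {
		return r
	}
	return 0
}

// Add records one shard and returns (received, remaining).
func (t *restoreTracker) Add(index int, shard []byte) (int, int, error) {
	if _, dup := t.shards[index]; dup {
		return len(t.shards), t.remaining(), fmt.Errorf("shard %d already provided", index)
	}
	if len(shard) == 0 {
		return len(t.shards), t.remaining(), errors.New("empty shard")
	}
	t.shards[index] = shard
	return len(t.shards), t.remaining(), nil
}

// Ready reports whether enough shards are present to rebuild the root key.
func (t *restoreTracker) Ready() bool { return len(t.shards) >= t.threshold }

func main() {
	t := newRestoreTracker(3)
	for i, s := range [][]byte{{0x01}, {0x02}, {0x03}} {
		got, left, err := t.Add(i+1, s)
		if err != nil {
			panic(err)
		}
		fmt.Printf("received %d, remaining %d\n", got, left)
	}
	fmt.Println("ready:", t.Ready())
}
```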

System Restoration

Once enough shards are provided, SPIKE Nexus reconstructs the root key.

A separate goroutine then redistributes shards to SPIKE Keepers, and the system returns to normal operation.

Want More Pretty Pictures?

The diagrams above have been simplified for clarity. You can find more detailed ones in the diagrams folder of the SPIKE GitHub repository.